Plug-and-Play Diffusion Features for Text-Driven Image-to-Image Translation

Supplementary Material

- Our Results

- Comparisons to Baselines

- Additional Qualitative Comparisons

- Diffusion Features PCA Visualizations

We recommend watching all images in full screen. Click on the images for seeing them in full scale.

Our Results

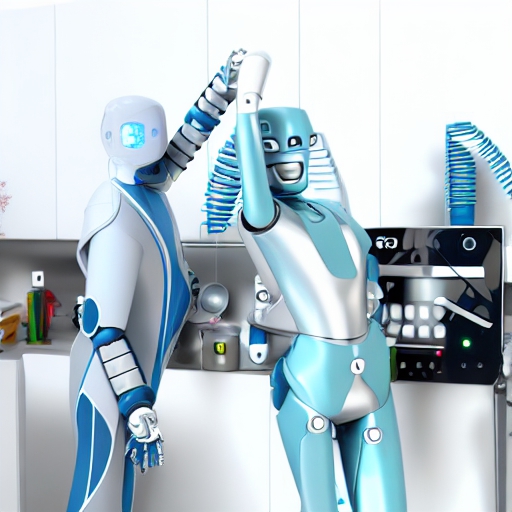

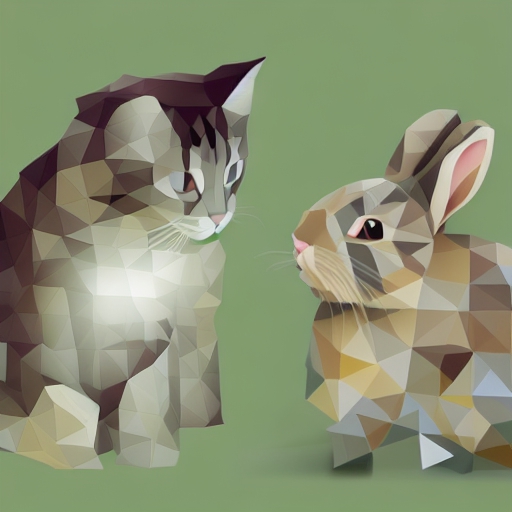

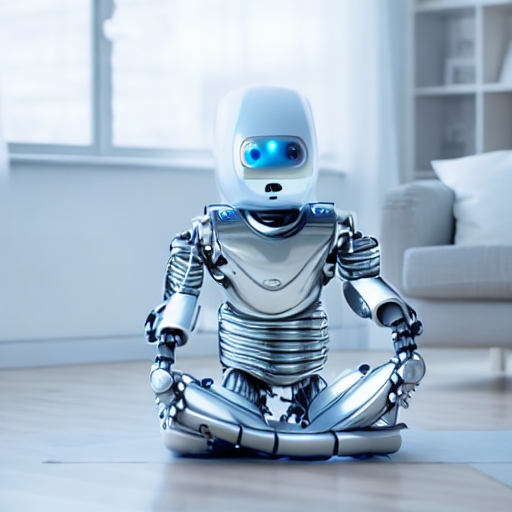

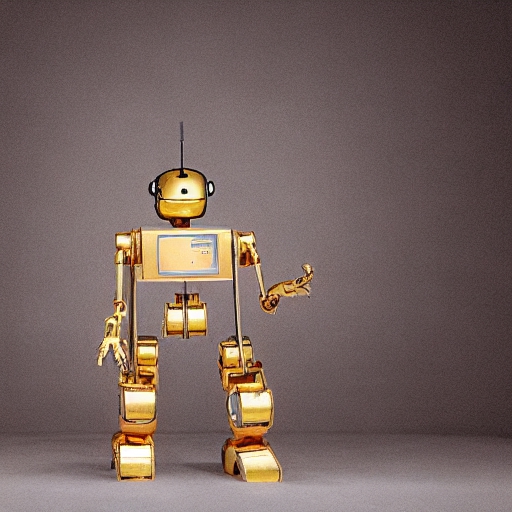

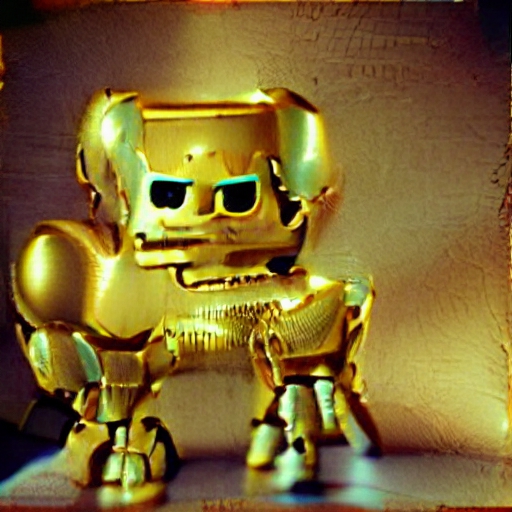

We present sample results of our method on 3 benchmarks - Wild TI2I - Real Images, Wild TI2I - Generated Images, and ImageNet-R TI2I (See the Supplementary PDF and the paper for details). Our method preserves the structure of the guidance image while matching the description of the target prompt.

Wild TI2I - Real images

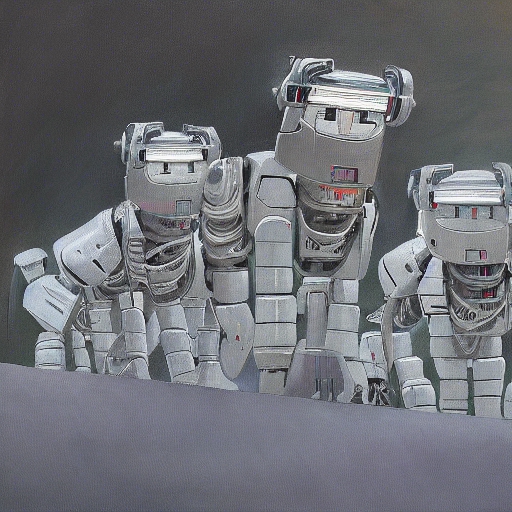

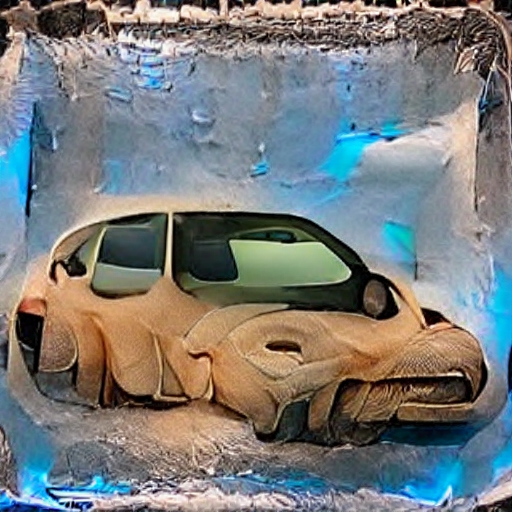

Comparisons to Baselines

Existing methods of text-guided image translation suffer from a trade-off between structure preservation and target text fidelity.

- SDEdit ([1]) suffers from appearance leakage when preserving the structure and deviates from the structure when using a higher noising step for appearance deviation.

- Prompt-to-Prompt ([2]) achieves text fidelity but does not preserve the details in the structure of the guidance image.

- DiffuseIT ([3]) preserve the guidance image structure but fails to fulfill the target text.

- VQ-GAN + CLIP ([4]) fails to preserve the original image structure and produces artifacts.

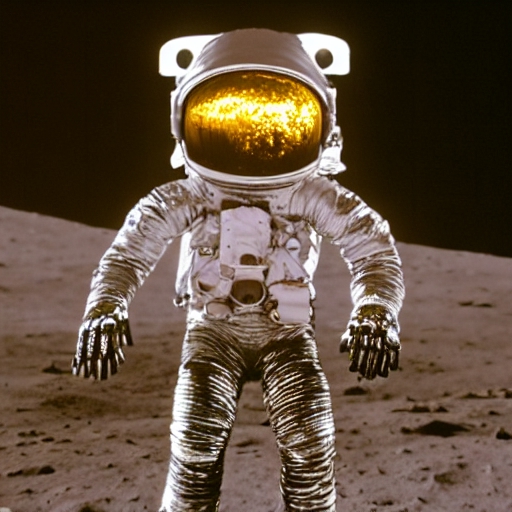

Additional Qualitative Comparisons

We present additional qualitative comparisons to Text2LIVE, DiffusionCLIP and FlexIT. These methods either fail to deviate from the guidance image or create undesired artifacts. We note that Text2LIVE is limited to performing only textural edits and cannot apply structural changes necessary to convey the target edit (e.g. dog to bear, church to asian tower). Moreover, Text2LIVE does not leverage a strong generative prior, hence producing low visual quality.

| "a photo of a yorkshire terrier" | Text2LIVE ([5]) | DiffusionCLIP ([6]) | FlexIT ([7]) | Ours |

|---|---|---|---|---|

|

|

|

|

|

| "a photo of a bear" | Text2LIVE | DiffusionCLIP | FlexIT | Ours |

|

|

|

|

|

| "a photo of Venom" | Text2LIVE | DiffusionCLIP | FlexIT | Ours |

|

|

|

|

|

| "a photo of a golden church" | Text2LIVE | DiffusionCLIP | FlexIT | Ours |

|

|

|

|

|

| "a photo of an Asian ancient tower" | Text2LIVE | DiffusionCLIP | FlexIT | Ours |

|

|

|

|

|

| "a photo of a wooden house" | Text2LIVE | DiffusionCLIP | FlexIT | Ours |

|

|

|

|

|

[1] Chenlin Meng, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. Sdedit: Image synthesis and editing with stochastic differential equations. arXiv preprint arXiv:2108.01073, 2021.

[2] Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626, 2022.

[3] Gihyun Kwon and Jong Chul Ye. Diffusion-based image translation using disentangled style and content representation. arXiv preprint arXiv:2209.15264, 2022.

[4] Katherine Crowson, Stella Biderman, Daniel Kornis, Dashiell Stander, Eric Hallahan, Louis Castricato, and Edward Raff. Vqgan-clip: Open domain image generation and editing with natural language guidance. In European Conference on Computer Vision, pages 88–105. Springer, 2022.

[5] Omer Bar-Tal, Dolev Ofri-Amar, Rafail Fridman, Yoni Kasten, and Tali Dekel. Text2live: Text-driven layered image and video editing. In European Conference on Computer Vision. Springer, 2022.

[6] Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. Diffusionclip: Text-guided diffusion models for robust image manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022.

[7] Guillaume Couairon, Asya Grechka, Jakob Verbeek, Holger Schwenk, and Matthieu Cord. Flexit: Towards flexible semantic image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 18270–18279, 2022.